By Nicole Findlay

Photos by Luther Caverly

Jason Millar is bridging the divide that separates engineering programs and the humanities.

A former engineer, Millar is now a philosophy instructor at Carleton University while also completing post-doctoral research in philosophy at the University of Ottawa’s faculty of law.

Millar’s research, in a field sometimes known as robot ethics, examines the ethical implications of advances in robotics and artificial intelligence.

“We are spinning this web of technology, how do we do that and maintain our humanity?” Millar asks.

“What are the questions that would lead us to think that maybe we could lose our humanity in the first place?”

His research focuses on driverless cars, social robotics, and weapons systems, all artificial intelligence technologies that blur the boundary between human and machine. Each of these technologies raises myriad social and ethical questions.

Blurred Boundary Between Human and Machine

Early in his career as an engineer for a large aerospace engineering firm, Millar helped design electronics assemblies for commercial and military aircraft and for the International Space Station. One assembly he received stood out from the rest: a “big green donut” unlike any of the traditional rectangular assemblies he had encountered. It was part of a guided bomb unit, and it brought him up short. It was the first time the ethical implications of his engineering work caused him to question how we think about, design and use technology.

“My engineering education didn’t provide me with any kind of ‘intellectual toolkit’ to deal with that question,” said Millar.

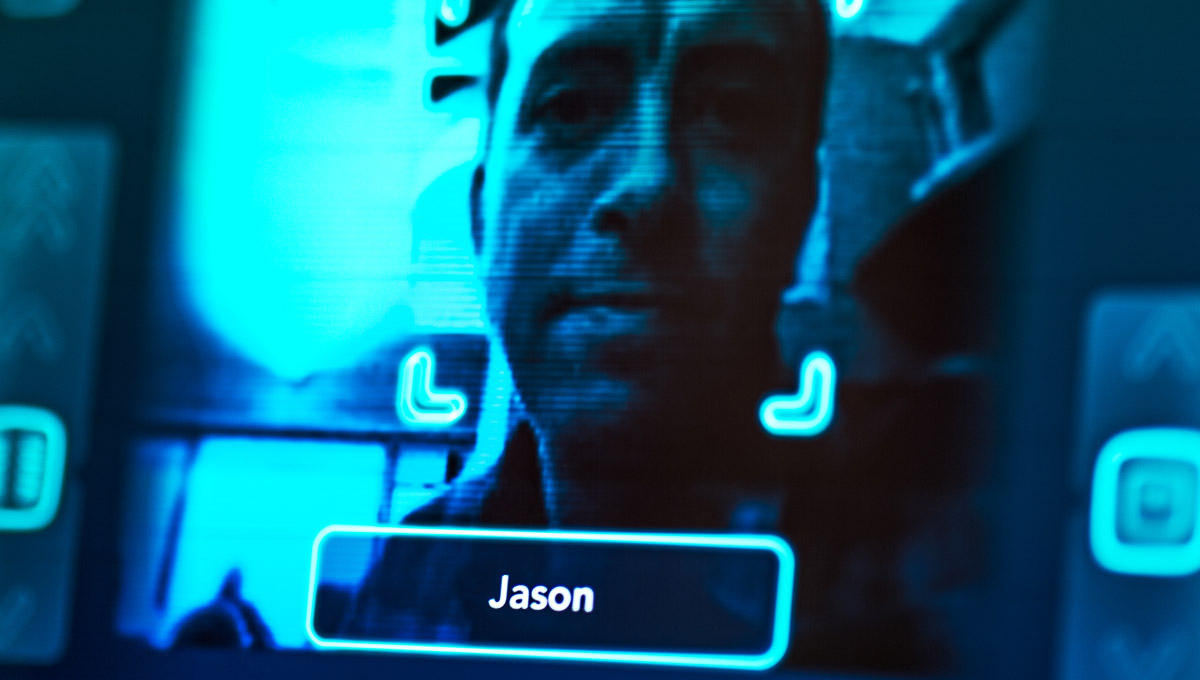

Jason Millar is recognized and identified through the eyes of a robot.

A seemingly inconsequential political philosophy course taken as an elective during his engineering program became the lynchpin linking his design work to the ethical questions it raised. This one course became a catalyst for a career change when Millar left his engineering work to start over—pursuing undergraduate studies and eventually a PhD in philosophy.

Friend or Foe

The dizzying speed at which technology has infiltrated nearly every aspect of our lives requires closer scrutiny as new advances such as Deep Learning confound our expectations of the devices we have come to rely on.

A relatively new advance in artificial intelligence, Deep Learning goes beyond merely programming devices such as driverless cars to drive. Instead, borrowing from the architecture of the human brain, it teaches them how to “learn” to drive, akin to how a human would.

“We are starting to see that the systems surprise their designers,” Millar says.

“They’ll do things that are highly effective in achieving goals that the designers never anticipated.”

He cites the computer program AlphaGo as one example. Developed by Google DeepMind, AlphaGo confounded and out-maneuvered human players in the highly complex game of Go.

Millar says this increased complexity correlates with unpredictability and raises novel questions about responsibility.

Millar’s research untangles thorny ethical questions of responsibility and trust. Who is responsible when the driverless car has an accident: the occupant, the engineer who designed the car, or the car itself? The answer has far-reaching implications, not only for engineers, but for insurance companies, lawyers, manufacturers, policy-makers, and the public. The driverless car is just one of the rapidly evolving technologies that are transforming how humans think about and use technology.

Robot Ethics: Trusting the Machine

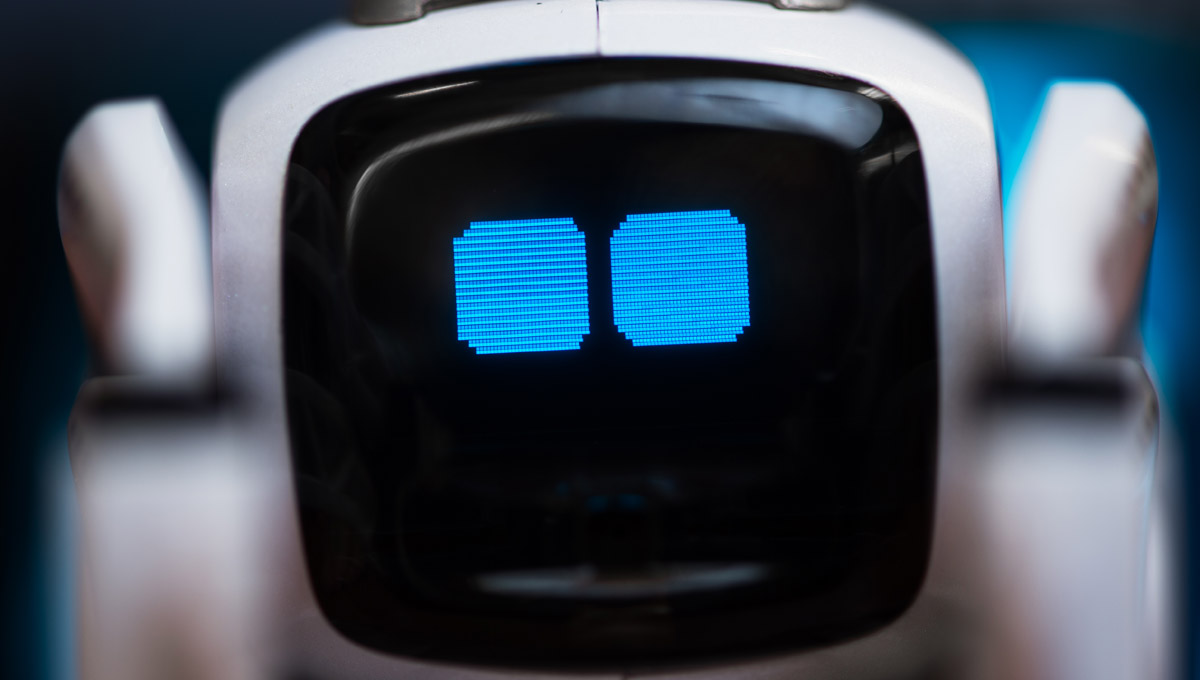

Millar examines our psychological predilection to trust others and to anthropomorphize, or assign human characteristics to, the technologies we interact with.

In his research he often encounters “design literature” that addresses “how to make people trust systems” without seriously questioning whether or not the systems themselves are “trustworthy.”

“Philosophy can help articulate what counts as trustworthy in these different kinds of systems.”

This is crucial to ensure that the needs of the designers and the end users of technology are balanced and that our innate tendency to trust is not abused.

Nowhere is this trust more prevalent than in our use of social media platforms. When we post on Facebook or any other social medium, we have certain expectations of the technology. To some degree we place our trust in it; we expect that the privacy settings available to us will provide some measure of protection. Until they don’t.

Millar coined the term “socially awkward technology” to describe our interaction with the technologies that facilitate our social lives.

“Facebook is a little socially awkward. It doesn’t really understand what my expectations are. It violates the norms that I would attach to a really trustworthy mediator,” says Millar.

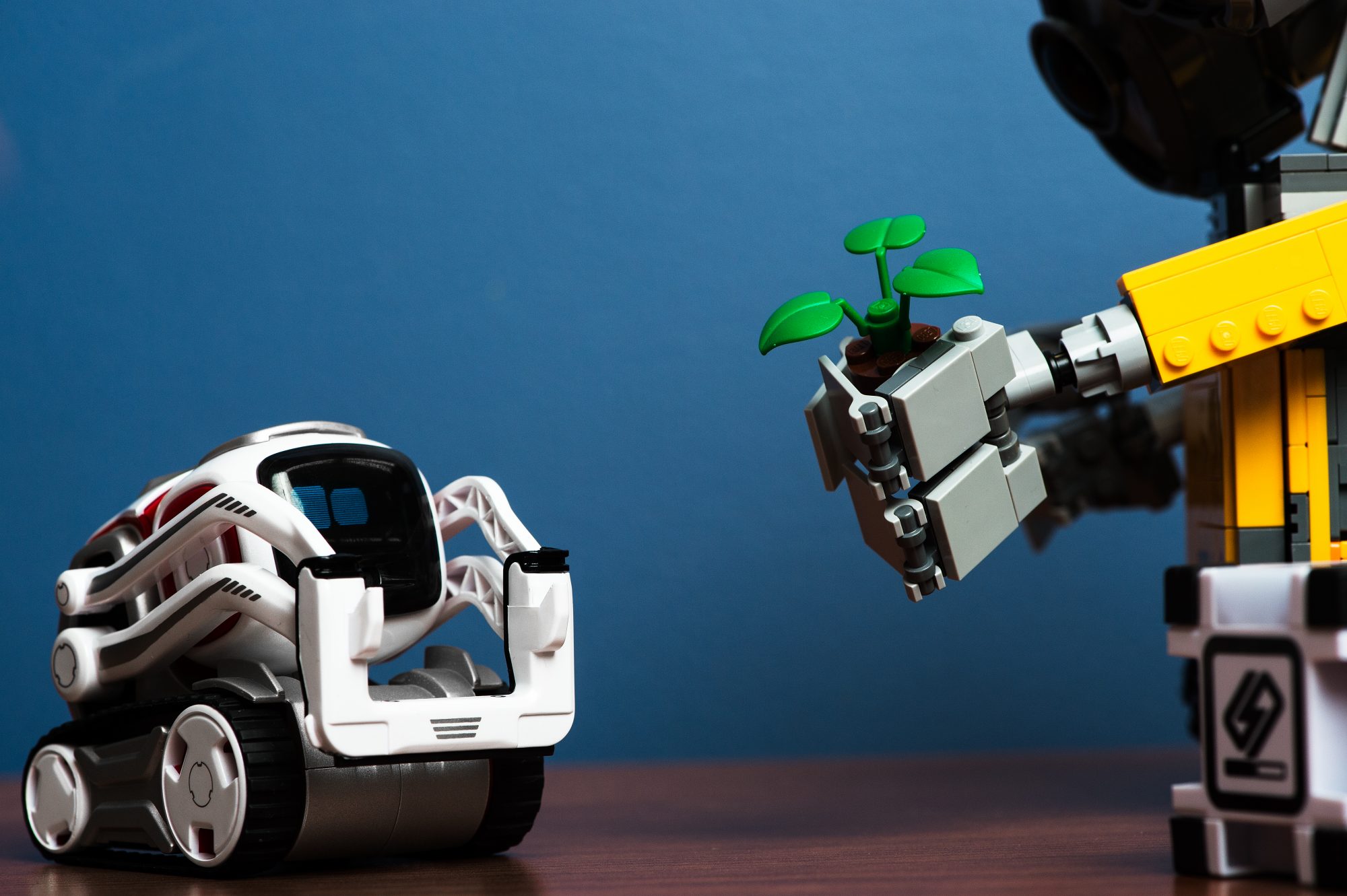

Social robotics will amplify this problem. “Because the robots are designed to hook us emotionally, to smile at us and make us feel good, it’s imperative that they’re trustworthy by design.”

Friday, December 16, 2016 in Engineering, Research, Technology
